Tabular RL for Value Prediction

The value Prediction Problem

Given a policy

Why is it useful? Recall that if we know how to compute

On-policy learning: data is generated by

off-policy learning: data is generated by some other policy

When action is always chosen by a fixed policy, the MDP reduces to a Markov chain plus a reward function over states, also known as Markov Reward Processes (MRP)

Monte-Carlo Value Prediction

If we can roll out trajectories from any starting state that we want, here is a simple procedure

For each

- For episodic tasks, roll out until termination

- For continuing tasks, roll out to a length (typically

- Let

Also works if we can draw starting state from an exploratory initial distribution (i.e., one that assigns non-zero probability to every state)

Keep generating trajectories until we have enough data points for each starting state

Implementing MC in an online manner

- The previous procedure assumes that we collect all the data, store them, and then process them (batch-mode learning)

- Can we process each data point as they come, without ever needing to store them? (online, one-pass algorithm)

More generally,

in theory, convergence require sum of

The expected value of the target is what we want to update our estimate to, but since it’s noisy, we only move slightly to it

Alternative expression:

Moving the estimate in the direction of error (= target - current)

Can be interpreted as stochastic gradient descent

If we have i.i.d. real random variables

- Stochastic gradient:

Every-visit Monte-Carlo

Supose we have a continuing task. What if we cannot set the starting state arbitrarily?

Let’s say we only have one single long trajectory

(By long trajectory, we mean trjectory length >> effective horizon

On-policy:

Algorithm: for each

Convergence requires additional assumptions: the Markov chain induced by

Every-visit Monte-Carlo

You can use this idea to improve the algorithm when we can choose the starting state & the MDP is episodic

i.e., obtain a random return for each state visited on the trajectory

What if a state occurs multiple times on a trajectory?

Approach 1: only the 1st occurrence is used (first-visit MC)

Approach 2: all of them are used (every-visit MC)

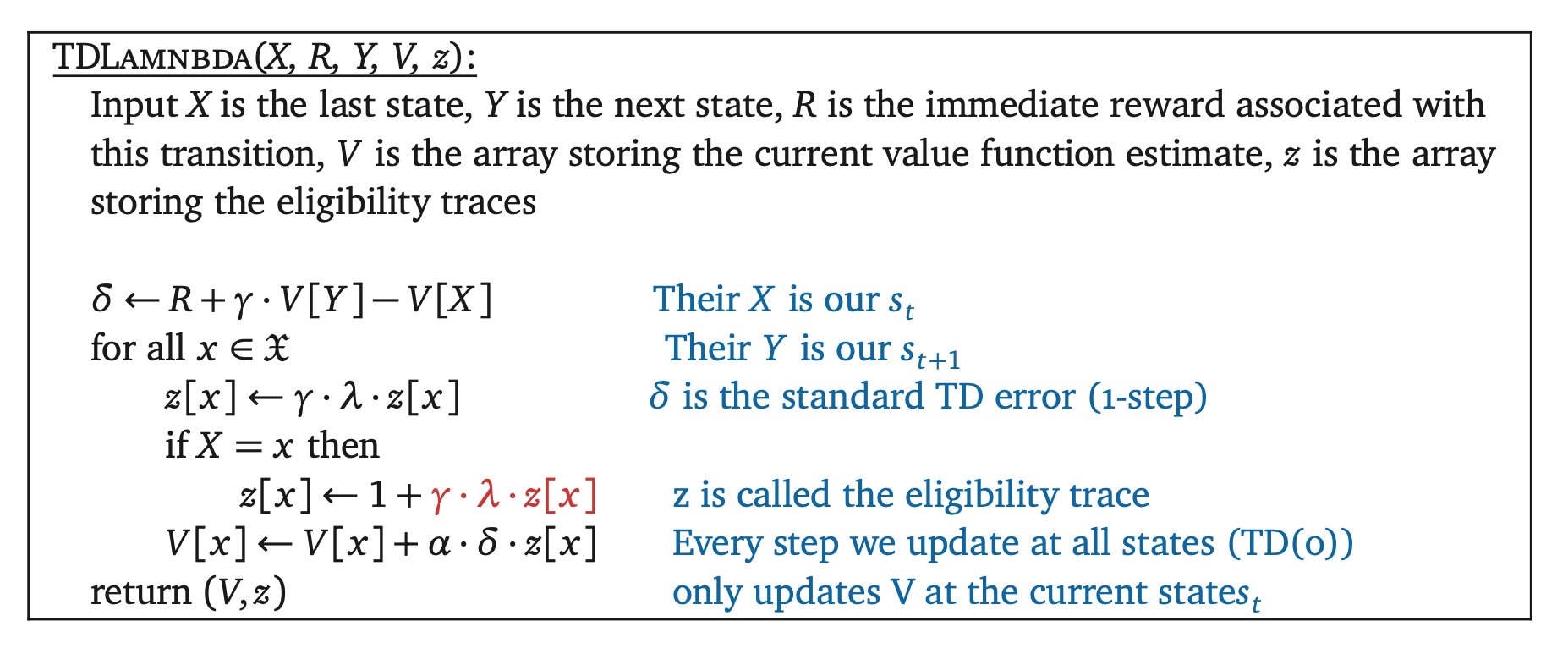

Alternative Approach: TD(0)

- Again suppose we have a single long trajectory

in a contunuing task

TD = temperal difference

The same structure as the MC update rule, except that we are using a different targert here:

Often callled bootstrapped target: the target value depends on our current estimated value function

Conditioned on

It’s

Understanding TD(0)

Imagine a slightly different procedure

Initialize

Keep running

Note that only

What’s the relationship between

Copy

TD(0): almost the same, except that we don’t wait. Copy

Algorithms that wait acutally have a come back in Deep RL

TD(0) vs MC

TD(0) target:

MC target:

MC target is unbaised: expectation of target is the

TD(0) target is baised (w.r.t.

Although the expected target is not

On the other hand, TD(0) has lower variance than MC

Bias vs variance trade-off

Also a ptractical concern: when interval of a time step is too small (e.g., in robotics),

1-step bootstrap (= TD(0)):

2-step bootstrap:

3-step bootstrap:

Excerise: What’s the exptected target in n-step bootstrap?

limit

Why the choice of

Enables efficient online implementation

Backward view of